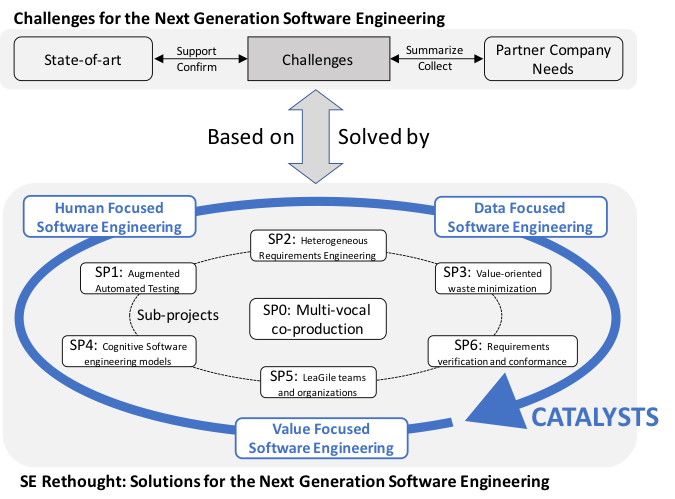

SERT Research Sub-projects

SP1: Augmented Automated Testing: leveraging human-machine symbiosis for high-level test automation

This project aims to address questions associated with QA efficiency and to research an approach that combines human best practices, advances in system intelligence, and state-of-the-art human-machine interaction into augmented automated testing. The keyword augmentation refers to the change, enhancement and evolution of tests, tools and test data visualization, either to help the human understand test automation output or to help the machine by prompting the human to steer automation. This will maximize the utilization of human cognitive ability during exploration- and exploitation-based testing, whilst enabling the machine to automate repetitive regression testing of the system under test (SUT) more effectively through prompted input from the human, which we refer to as human-machine symbiosis. This is an extension of concepts that have only partially been evaluated, for instance in search-based software engineering research [102]. The ultimate goal of the sub-project is semi-automated, trustworthy, easy-to-use, graphical systems that enable human-machine symbiosis: the human feeds the systems with knowledge that allows the test systems to give better support to the human operator, which in turn gives the test systems improved input from the human in subsequent iterations.

To initialize this sub-project, a longitudinal collaborative study with several industry partners will be performed utilizing an augmented automated testing (AAT) tool, EyeScout. This tool represents a suitable platform on which research can be performed to help answer several of the sub-project’s main research questions. The tool combines image-recognition-based software testing, also known as Visual GUI Testing [96], [103], with dynamic modelling based on recorded user interaction at the GUI level, to support user guidance and automated, user-emulated regression testing of functionalities as well as non-functional attributes.

In summary, this project will focus on the development and evaluation of a new test approach (AAT) that can leverage new and advanced automated technologies in addition to cognitive exploration to make testing more accurate, efficient and effective and thus support the market’s needs for faster delivery and higher quality software.

SP2: Heterogeneous multi-source requirements engineering

In traditional software engineering, business analysts identify user or market needs (intelligence), synthesize a set of features and functions that satisfy those needs as a requirements specification (design), and prioritize and package these requirements based on business strategies and constraints (choice). This process is highly inefficient, as it funnels a small amount of information about users’ needs from a limited set of users (selected by business analysts) through a limited-capacity process that relies mostly on manual effort and is constrained by increasing time pressure [104]. Companies are currently exposed to large amounts of information and data originating from business intelligence, product usage data, reviews and other forms of feedback, e.g., requirements acquired by applying crowd-based engineering principles [105]. The amount of data and its heterogeneous, multi-sourced nature challenge requirements identification and concretization and create demands for revisiting software design and development activities. A growing trend is also that a substantial amount of this data is generated by machine-learning components integrated into self-adaptive software products (e.g., systems with deep learning algorithms). This means that these software products not only continuously provide data about the changing environment, but also self-adapt and change their behaviour based on contextual fluctuations (so-called non-deterministic behaviour). However, despite its many substantial benefits, this machine learning trend greatly contributes to overloaded requirements management [106] and means that the inception, realization and evolution phases of software systems development need to be supported by efficient data acquisition and analysis approaches that enable decision-centric processes [32]. In such a process, each phase becomes data-intensive and can be seen as a three-step process that consists of: i) data collection and problem formulation (intelligence); ii) development of alternatives (design); and iii) evaluation of alternatives (choice) [107]. For example, data-intensive requirements inception involves identifying relevant data sources, filtering relevant information from non-relevant (early requirements triage [108]), and identifying requirement abstractions or features [109]. Data-intensive requirements evolution involves semi-automated analysis of product usage data and user feedback [105], aggregating and prioritizing these opinions, and presenting them to decision-makers, who decide what to focus on as well as which features and non-functional aspects constitute value, and what type of value [110].

SP3: Value-Oriented Strategy to Detect and Minimize Waste

Software development organizations work under continuous time pressure and strict deadlines [9] that sometimes force them to make ineffective use of resources, generating waste or overhead.

Waste is in this context defined as activities that consume time, money or space without producing any type of relevant customer value, as opposed to overhead, which is defined as the effort spent on activities that could be avoided by improving the way they are performed. However, for software development organizations the separation between waste and overhead is less obvious, and their detection and management are challenging [67]. For example, there are activities that do not directly produce business value, such as architectural improvements to ensure flexibility and maintainability [67]. Another example of overhead is extensive intra- and inter-team communication. The difficulty is that overhead can often be mistaken for waste, and removing it can then introduce even more waste, e.g., misunderstandings in development teams due to lack of communication [118].

During product inception, time pressure restricts the ability to analyze potential requirements and to study the short-term (e.g., customer fit) and long-term (e.g., internal-business and architecture) consequences of including these requirements in a product. This can lead to investments in requirements analysis, prototyping and even customer tests for features that never become part of a product [66]. Even if included in the next release of a product, these features are often realized under time pressure that forces a barely good-enough design during the realization stage, introducing new forms of waste, such as Technical Debt. Technical Debt is a metaphor for the long-term consequences that sub-optimal design decisions have in software projects [119].

During the evolution stage, sub-optimal decisions cause test case prioritization issues, sub-optimal test coverage and code erosion that might propagate to testing artefacts [120]. The consequences are severe and include: lower efficiency of requirements processing and decision making, code and architectural erosion, or sub-optimal usage of testing resources.

Similarly, the misalignment between requirements engineering and testing activities introduces several types of waste both in agile and in plan-driven projects [81] during realization and evolution stages.

Therefore, there is a need to identify and characterize various types of waste and overhead during product inception, realization and evolution, and their relationship with the ability of organizations to create business and customer value. This sub-project advocates introducing a value-driven, holistic approach to waste and overhead identification and mitigation. It will integrate and extend previous research efforts that, although substantial, mostly focus on isolated stages of the development process or on isolated artifacts.

SP4: Cognitive software engineering development models

Software engineering development models, i.e., processes, practices, and principles, are often considered in terms of what actions should be performed on what artefacts and by what part of the development organisation. For example, processes can be thought of as transforming input products to output products through the consumption of further products (such as guidelines), to advance towards a goal [123]. However, when the software artefacts are very large and complex, and actions need to be performed in parallel and span multiple parts of the organisation or even multiple organisations, rigid or badly designed development models can become an obstacle due to inefficient utilisation of organisational capabilities and mismatches between individual understanding and model assumptions. Furthermore, many development models do not consider motivational and other human factors that influence model understanding and enactment. Badly designed or enacted models may impede performance by, e.g., causing unnecessary cognitive load or disrupting communication.

Research on process modelling has demonstrated some of the cognitive mechanisms that are active when humans create and understand processes, and that influence process model quality [124]. It is not yet known how similar mechanisms may influence enactment of processes and other development models, but the relationship between the software development process and motivation has been documented [125].

This sub-project investigates new forms of software engineering development models which fit the characteristics of humans by considering human strengths and limitations. It also advances development models that include automation as an active component, freeing human resources to focus on creative rather than repetitive tasks. Such models are referred to here as cognitive software engineering development models. The models assume an organisational structure with loose coordination, emphasise overcoming cognitive limitations of humans both through characteristics of the models themselves and through automation, allow more effective use of human resources, are designed to be motivational, and emphasise product value as a basis for decision-making. The models can differ in level of detail and can range from micro-models intended for individuals to overarching, cross-organisational development frameworks.

Lean and agile as well as Open Source approaches to software development include several elements related to human factors, and form the starting point for this sub-project. However, there is a need to advance the state of the art beyond these approaches to enable increased performance and competitiveness in software organisations. This sub-project provides base technological research to the other sub-projects in this profile regarding human aspects of development model design, enactment, and execution on the individual, team, as well as organisational levels.

SP5: Study and Improve LeaGile handling of organizational and team interfaces

Many software organisations strive to increase competitiveness by increasing their ability to flexibly adapt to changing market conditions. Agile and lean software development strives to enable such flexibility, but a fundamental challenge of agile and lean organizations developing software-intensive products is that the concept of close team collaboration and joint ownership of a product has issues with scaling [19], [126]. In a company developing a large software-intensive product or service it is impossible to put all stakeholders into one team. The way in which the teams are arranged is a crucial success factor for the organisation and its products. Thus, interfaces that require coordination are created even between agile teams [19]. In addition to making the development organisation agile, a further challenge is to consider and design the delivery of the product at an early stage. DevOps addresses this challenge by extending the cross-functionality of teams to include operations, creating additional needs for interfaces between teams and organisational functions, and their counterparts in the software and the technical deployment environment. Moreover, this team structure and the cross-team interfaces need to be aligned with the software architecture, following the socio-technical congruence principle (e.g., [50], [79], [127]), to enhance the ability of the organization to create value while minimizing waste and overhead [79]. Failure in the creation and coordination of teams, in terms of experience and cross-functionality, might lead to the introduction of waste and overhead, e.g., in the form of effort spent by teams developing software that must be re-written by more senior developers and architects, or duplication of functionality between teams.

This sub-project addresses the challenge of designing organisational and team interfaces, and designing matching technical structures in the actual software architectures, in the context of rapid, data- and value-focused software engineering. In an attempt to take agile and its refinements like DevOps and SAFe to the next level, this sub-project aims to look more explicitly at issues of coordination interfaces, utilizing the lean concepts of Value creation and Waste removal.

SP6: Verification of Software Requirements in Dynamic, Complex and Regulated Markets

New and evolving legislation and regulations are a growing concern for companies developing software-intensive systems due to the costs associated with making their products compliant [128]. Regulatory requirements, as well as most requirements specifications in industry, are written, to a large extent, in natural language [129]. One potential reason for this is that natural language (NL) specifications are easy to comprehend without training (except in the particular domain) and are therefore immediately accessible to any stakeholder. At the same time, NL is inherently imprecise and ambiguous, which is an impediment especially when verifying software compliance [nekvi2015].

In regulated markets, compliance and the ability to adapt to changes in a flexible manner are key to remaining competitive and are sometimes even required in order to participate in the market [zeni2015]. However, compliance analysis and adoption are still primarily performed with practices that lack scalability, and little automated support exists to acquire, analyse and prioritize the information required to achieve compliance with legislation and regulations [130], [131].

[1] A. Haghighatkhah, A. Banijamali, O.-P. Pakanen, M. Oivo, and P. Kuvaja, “Automotive software engineering: A systematic mapping study,” J. Syst. Softw., vol. 128, pp. 25–55, 2017.

[2] T. Gorschek, “The evolution towards soft(er) products – Ten challenges of old still new,” Commun. ACM, vol. In print, 2017.

[3] J. Bosch, “Speed, data, and ecosystems,” IEEE Softw., vol. 33, no. 1, pp. 82–88, Jan. 2016.

[4] M. Khurum, T. Gorschek, and M. Wilson, “The software value map – An exhaustive collection of value aspects for the development of software intensive products,” J. Softw. Evol. Process, vol. 25, no. 7, pp. 711–741, Jul. 2013.

[5] M. Unterkalmsteiner, T. Gorschek, R. Feldt, and N. Lavesson, “Large-scale information retrieval in software engineering – an experience report from industrial application,” Empir. Softw. Eng., vol. 21, no. 6, pp. 2324–2365, Dec. 2016.

[6] M. V. Kosti, R. Feldt, and L. Angelis, “Archetypal personalities of software engineers and their work preferences: a new perspective for empirical studies,” Empir. Softw. Eng., vol. 21, no. 4, pp. 1509–1532, 2016.

[7] L. Northrop et al., Ultra-Large-Scale Systems: The Software Challenge of the Future. Software Engineering Institute, Carnegie Mellon University, 2006.

[8] F. P. Brooks, The Mythical Man-Month. Addison-Wesley, 1975.

[9] J. Bosch, Speed, Data, and Ecosystems: Excelling in a Software-Driven World. CRC Press, Taylor and Francis Group, 2016.

[10] B. Fitzgerald and K. J. Stol, “Continuous software engineering: A roadmap and agenda,” J. Syst. Softw., vol. 123, pp. 176–189, 2017.

[11] R. Parasuraman, T. B. Sheridan, and C. D. Wickens, “A model for types and levels of human interaction with automation,” IEEE Trans. Syst. Man, Cybern. – Part A Syst. Humans, vol. 30, no. 3, pp. 286–297, May 2000.

[12] K. Wiklund, S. Eldh, D. Sundmark, and K. Lundqvist, “Technical debt in test automation,” in IEEE 5th International Conference on Software Testing, Verification and Validation, 2012, pp. 887–892.

[13] R. Parasuraman and V. Riley, “Humans and Automation: Use, Misuse, Disuse, Abuse,” Hum. Factors J. Hum. Factors Ergon. Soc., vol. 39, no. 2, pp. 230–253, Jun. 1997.

[14] J. D. Lee and K. A. See, “Trust in Automation: Designing for Appropriate Reliance,” Hum. Factors J. Hum. Factors Ergon. Soc., vol. 46, no. 1, pp. 50–80, Jan. 2004.

[15] B. Marculescu, S. Poulding, R. Feldt, K. Petersen, and R. Torkar, “Tester interactivity makes a difference in search-based software testing: A controlled experiment,” Inf. Softw. Technol., vol. 78, pp. 66–82, Oct. 2016.

[16] M. Harman and B. F. Jones, “Search-based software engineering,” Inf. Softw. Technol., vol. 43, no. 14, pp. 833–839, Dec. 2001.

[17] B. Marculescu, R. Feldt, and R. Torkar, “A concept for an interactive search-based software testing system,” in International Symposium on Search Based Software Engineering, 2012, vol. 7515 LNCS, pp. 273–278.

[18] C. Le Goues, S. Forrest, and W. Weimer, “Current challenges in automatic software repair,” Softw. Qual. J., vol. 21, no. 3, pp. 421–443, Sep. 2013.

[19] T. Dingsøyr and N. B. Moe, “Research challenges in large-scale agile software development,” ACM SIGSOFT Softw. Eng. Notes, vol. 38, no. 5, p. 38, 2013.

[20] M. Kuhrmann et al., “Hybrid software and system development in practice: waterfall, scrum, and beyond,” in International Conference on Software and System Process, 2017, pp. 30–39.

[21] M. Poppendieck and T. Poppendieck, Lean Software Development: An Agile Toolkit. Addison-Wesley, 2003.

[22] P. Gregory, L. Barroca, H. Sharp, A. Deshpande, and K. Taylor, “The challenges that challenge: Engaging with agile practitioners’ concerns,” Inf. Softw. Technol., vol. 75, pp. 26–38, 2016.

[23] J. T. Klein, Interdisciplinarity: History, theory, and practice. Wayne State University Press, 1990.

[24] D. E. Perry, A. A. Porter, and L. G. Votta, “Empirical studies of software engineering,” in Conference on The future of Software Engineering, 2000, pp. 345–355.

[25] P. Lenberg, R. Feldt, and L. G. Wallgren, “Human Factors Related Challenges in Software Engineering–An Industrial Perspective,” in IEEE/ACM 8th International Workshop on Cooperative and Human Aspects of Software Engineering, 2015, pp. 43–49.

[26] R. N. Rapoport, “Three Dilemmas in Action Research,” Hum. Relations, vol. 23, no. 6, pp. 499–513, 1970.

[27] M. Hult and S. Lennung, “Towards a Definition of Action Research: a Note and Bibliography,” J. Manag. Stud., vol. 17, no. 2, pp. 241–250, 1980.

[28] L. Briand, D. Bianculli, S. Nejati, F. Pastore, and M. Sabetzadeh, “The Case for Context-Driven Software Engineering Research: Generalizability Is Overrated,” IEEE Softw., vol. 34, no. 5, pp. 72–75, Jan. 2017.

[29] F. Siedlok and P. Hibbert, “The Organization of Interdisciplinary Research: Modes, Drivers and Barriers,” Int. J. Manag. Rev., vol. 16, no. 2, pp. 194–210, Apr. 2014.

[30] S. S. Bajwa, C. Gencel, and P. Abrahamsson, “Software Product Size Measurement Methods: A Systematic Mapping Study,” in Joint Conference of the International Workshop on Software Measurement and the International Conference on Software Process and Product Measurement, 2014, pp. 176–190.

[31] M. Weber and J. Weisbrod, “Requirements engineering in automotive development: Experiences and challenges,” IEEE Softw., vol. 20, no. 1, pp. 16–24, Jan. 2003.

[32] B. Regnell, R. B. Svensson, and K. Wnuk, “Can we beat the complexity of very large-scale requirements engineering?,” in International Working Conference on Requirements Engineering: Foundation for Software Quality, 2008, vol. 5025 LNCS, pp. 123–128.

[33] B. H. C. Cheng et al., “Software Engineering for Self-Adaptive Systems: A Research Roadmap,” in Software Engineering for Self-Adaptive Systems, Springer, 2009, pp. 1–26.

[34] B. Boehm, “Some future trends and implications for systems and software engineering processes,” Syst. Eng., vol. 9, no. 1, pp. 1–19, 2006.

[35] S. Konrad and M. Gall, “Requirements Engineering in the Development of Large-Scale Systems,” in IEEE International Requirements Engineering Conference, 2008, pp. 217–222.

[36] R. Baskerville, B. Ramesh, L. Levine, J. Pries-Heje, and S. Slaughter, “Is Internet-Speed Software Development Different?,” IEEE Softw., vol. 20, no. 6, pp. 70–77, Nov. 2003.

[37] W. C. Shih, “Does Hardware Even Matter Anymore?,” https://hbr.org/2015/06/does-hardware-even-matter-anymore, 2015.

[38] J. C. Mogul, “Emergent (mis)behavior vs. complex software systems,” ACM SIGOPS Oper. Syst. Rev., vol. 40, no. 4, Oct. 2006.

[39] F. D. Macías-Escrivá, R. Haber, R. Del Toro, and V. Hernandez, “Self-adaptive systems: A survey of current approaches, research challenges and applications,” Expert Syst. Appl., vol. 40, no. 18, pp. 7267–7279, 2013.

[40] H. V. D. Parunak and S. A. Brueckner, “Software Engineering for Self-Organizing Systems,” Challenges Agent-Oriented Softw. Eng., vol. 30, no. 14, pp. 419–434, Sep. 2015.

[41] D. Damian, “Stakeholders in Global Requirements Engineering: Lessons Learned from Practice,” IEEE Softw., vol. 24, no. 2, pp. 21–27, Mar. 2007.

[42] L. Chung and J. C. S. do Prado Leite, “On Non-Functional Requirements in Software Engineering,” in Conceptual Modeling: Foundations and Applications, Springer, 2009, pp. 363–379.

[43] S. Biffl, A. Aurum, B. Boehm, H. Erdogmus, and P. Grünbacher, Value-based software engineering. Springer, 2006.

[44] W. Afzal, R. Torkar, and R. Feldt, “A systematic review of search-based testing for non-functional system properties,” Inf. Softw. Technol., vol. 51, no. 6, pp. 957–976, 2009.

[45] B. Boehm, “Value-based software engineering: reinventing,” SIGSOFT Softw. Eng. Notes, vol. 28, no. 2, Mar. 2003.

[46] P. Lenberg, R. Feldt, and L. G. Wallgren, “Behavioral software engineering: A definition and systematic literature review,” J. Syst. Softw., 2015.

[47] H. Simon, “A Behavioral Model of Rational Choice,” Q. J. Econ., 1955.

[48] D. Kahneman and A. Tversky, “Prospect Theory,” Econometrica, 1979.

[49] A. Tversky and D. Kahneman, “The framing of decisions and the psychology of choice,” Science, vol. 211, no. 4481, pp. 453–458, 1981.

[50] J. D. Herbsleb, “Global software engineering: The future of socio-technical coordination,” in FoSE 2007: Future of Software Engineering, 2007, pp. 188–198.

[51] D. A. Tamburri, R. De Boer, E. Di Nitto, P. Lago, and H. van Vliet, “Dynamic networked organizations for software engineering,” in International Workshop on Social Software Engineering, 2013.

[52] A. Fuggetta and E. Di Nitto, “Software process,” in Conference on Future of Software Engineering, 2014, pp. 1–12.

[53] F. F. Costa, “Big data in biomedicine,” Drug Discov. Today, vol. 19, no. 4, pp. 433–440, 2014.

[54] G. Bell, T. Hey, and A. Szalay, “Beyond the Data Deluge,” Science, vol. 323, no. 5919, pp. 1297–1298, 2009.

[55] A. Jain et al., “Commentary: The materials project: A materials genome approach to accelerating materials innovation,” APL Mater., vol. 1, no. 1, p. 11002, Jul. 2013.

[56] T. Hey, S. Tansley, and K. Tolle, The Fourth Paradigm: Data-Intensive Scientific Discovery. Microsoft Research, 2009.

[57] F. Provost and T. Fawcett, “Data Science and its Relationship to Big Data and Data-Driven Decision Making,” Big Data, vol. 1, no. 1, pp. 51–59, Mar. 2013.

[58] A. Begel and T. Zimmermann, “Analyze this! 145 questions for data scientists in software engineering,” in ACM/IEEE International Conference on Software Engineering, 2014, pp. 12–23.

[59] B. M. Gudipati, S. Rao, N. D. Mohan, and N. K. Gajja, “Big Data: Testing Approach to Overcome Quality Challenges structured testing technique,” Infosys Labs Briefings, vol. 11, no. 1, pp. 65–73, 2013.

[60] I. H. Witten, E. Frank, M. A. Hall, and C. J. Pal, Data Mining: Practical machine learning tools and techniques. Morgan Kaufmann, 2016.

[61] D. E. O’Leary, “Artificial intelligence and big data,” IEEE Intell. Syst., vol. 28, no. 2, pp. 96–99, 2013.

[62] J. Kennedy, “Particle swarm optimization,” in Encyclopedia of machine learning, Springer, 2011, pp. 760–766.

[63] P. Collopy and R. Horton, “Value Modeling for Technology Evaluation,” in AIAA/ASME/SAE/ASEE Joint Propulsion Conference & Exhibit, 2002.

[64] H. L. McManus, A. Haggerty, and E. Murman, “Lean engineering: a framework for doing the right thing right,” Aeronaut. J., vol. 111, no. 1116, pp. 105–114, 2007.

[65] H. Alahyari, R. Berntsson Svensson, and T. Gorschek, “A study of value in agile software development organizations,” J. Syst. Softw., vol. 125, pp. 271–288, 2017.

[66] K. Wnuk, T. Gorschek, D. Callele, E. A. Karlsson, E. Åhlin, and B. Regnell, “Supporting Scope Tracking and Visualization for Very Large-Scale Requirements Engineering-Utilizing FSC+, Decision Patterns, and Atomic Decision Visualizations,” IEEE Trans. Softw. Eng., vol. 42, no. 1, pp. 47–74, 2016.

[67] M. Khurum, K. Petersen, and T. Gorschek, “Extending value stream mapping through waste definition beyond customer perspective,” J. Softw. Evol. Process, vol. 26, no. 12, pp. 1074–1105, Dec. 2014.

[68] P. Kashfi, A. Nilsson, and R. Feldt, “Integrating User eXperience Practices into Software Development Processes: The implication of Subjectivity and Emergent nature of UX,” PeerJ Comput. Sci. (in submission), vol. 1, no. May, pp. 1–29, 2016.

[69] K. Hoffman, K. Wnuk, and D. Callele, “On the facets of stakeholder inertia: A literature review,” in 2014 IEEE 8th International Workshop on Software Product Management (IWSPM), 2014, pp. 31–37.

[70] C. Wohlin, D. Šmite, and N. B. Moe, “A general theory of software engineering: Balancing human, social and organizational capitals,” J. Syst. Softw., vol. 109, pp. 229–242, 2015.

[71] D. Šmite, N. B. Moe, A. Šāblis, and C. Wohlin, “Software teams and their knowledge networks in large-scale software development,” Inf. Softw. Technol., vol. 86, pp. 71–86, 2017.

[72] L. Gren, R. Torkar, and R. Feldt, “Group development and group maturity when building agile teams: A qualitative and quantitative investigation at eight large companies,” J. Syst. Softw., vol. 124, pp. 104–119, 2017.

[73] F. Fagerholm, M. Ikonen, P. Kettunen, J. Münch, V. Roto, and P. Abrahamsson, “Performance Alignment Work: How software developers experience the continuous adaptation of team performance in Lean and Agile environments,” in Information and Software Technology, 2015, vol. 64, pp. 132–147.

[74] M. V. Kosti, R. Feldt, and L. Angelis, “Archetypal personalities of software engineers and their work preferences: a new perspective for empirical studies,” Empir. Softw. Eng., vol. 21, no. 4, pp. 1509–1532, Aug. 2016.

[75] D. Graziotin, F. Fagerholm, X. Wang, and P. Abrahamsson, “On the Unhappiness of Software Developers,” in International Conference on Evaluation and Assessment in Software Engineering, 2017, pp. 324–333.

[76] J. Börstler, M. E. Caspersen, and M. Nordström, “Beauty and the Beast: on the readability of object-oriented example programs,” Softw. Qual. J., vol. 24, no. 2, pp. 231–246, 2016.

[77] J. Börstler et al., “‘I Know It when I See It’: Perceptions of Code Quality,” in Proceedings of the 2017 ACM Conference on Innovation and Technology in Computer Science Education, 2017, p. 389.

[78] J. Börstler and B. Paech, “The Role of Method Chains and Comments in Software Readability and Comprehension-An Experiment,” IEEE Trans. Softw. Eng., vol. 42, no. 9, pp. 886–898, 2016.

[79] S. Betz et al., “An evolutionary perspective on socio-technical congruence: The rubber band effect,” Int. Work. Replication Empir. Softw. Eng. Res., pp. 15–24, Oct. 2013.

[80] J. K. Martinsen, H. Grahn, and A. Isberg, “Heuristics for thread-level speculation in Web applications,” IEEE Comput. Archit. Lett., vol. 13, no. 2, pp. 77–80, Jul. 2014.

[81] M. Unterkalmsteiner, T. Gorschek, R. Feldt, and E. Klotins, “Assessing requirements engineering and software test alignment – Five case studies,” J. Syst. Softw., vol. 109, pp. 62–77, 2015.

[82] R. Jabangwe, D. Šmite, and E. Hessbo, “Distributed software development in an offshore outsourcing project: A case study of source code evolution and quality,” Inf. Softw. Technol., vol. 72, pp. 125–136, 2016.

[83] R. Jabangwe, K. Petersen, and D. Šmite, “Visualization of defect inflow and resolution cycles: Before, during and after transfer,” in Proceedings – Asia-Pacific Software Engineering Conference, APSEC, 2013, vol. 1, pp. 289–298.

[84] K. Petersen et al., “Choosing Component Origins for Software Intensive Systems: In-house, COTS, OSS or Outsourcing? — A Case Survey,” IEEE Trans. Softw. Eng., pp. 1–1, 2017.

[85] C. Wohlin, K. Wnuk, D. Šmite, U. Franke, D. Badampudi, and A. Cicchetti, “Supporting strategic decision-making for selection of software assets,” in 7th International Conference on Software Business, 2016, vol. 240, pp. 1–15.

[86] M. Borg, I. Lennerstad, R. Ros, and E. Bjarnason, “On Using Active Learning and Self-training when Mining Performance Discussions on Stack Overflow,” in International Conference on Evaluation and Assessment in Software Engineering, 2017, pp. 308–313.

[87] M. Unterkalmsteiner and T. Gorschek, “Process Improvement Archaeology – What led us here and what’s next?,” IEEE Softw., vol. In print, 2017.

[88] M. Unterkalmsteiner and T. Gorschek, “Requirements quality assurance in industry: Why, what and how?,” in International Working Conference on Requirements Engineering: Foundation for Software Quality, 2017, vol. 10153 LNCS, pp. 77–84.

[89] H. Femmer, M. Unterkalmsteiner, and T. Gorschek, “Which Requirements Artifact Quality Defects are Automatically Detectable? A Case Study,” in IEEE International Requirements Engineering Conference Workshops, 2017, pp. 400–406.

[90] K. Wnuk, T. Gorschek, and S. Zahda, “Obsolete Software Requirements,” Inf. Softw. Technol., vol. 55, no. 6, pp. 921–940, Jun. 2013.

[91] E. Alégroth, Z. Gao, R. Oliveira, and A. Memon, “Conceptualization and evaluation of component-based testing unified with visual GUI testing: An empirical study,” in 8th International Conference on Software Testing, Verification and Validation, 2015.

[92] R. Feldt and S. Poulding, “Broadening the Search in Search-Based Software Testing: It Need Not Be Evolutionary,” in Proceedings – 8th International Workshop on Search-Based Software Testing, SBST 2015, 2015, pp. 1–7.

[93] K. Wnuk and K. C. Maddila, “Agile and lean metrics associated with requirements engineering,” in IWSM Mensura ’17, 2017.

[94] M. Svahnberg and T. Gorschek, “A model for assessing and re-assessing the value of software reuse,” J. Softw. Evol. Process, vol. 29, no. 4, 2017.

[95] F. Fagerholm, A. Sanchez Guinea, H. Mäenpää, and J. Münch, “The RIGHT model for Continuous Experimentation,” J. Syst. Softw., 2017.

[96] E. Alégroth and R. Feldt, “On the long-term use of visual gui testing in industrial practice: a case study,” Empir. Softw. Eng., vol. 22, no. 6, pp. 2937–2971, 2017.

[97] B. Kitchenham et al., “Guidelines for performing Systematic Literature Reviews in Software Engineering,” EBSE Technical Report, Keele University, UK, 2007.

[98] C. Wohlin, “Guidelines for snowballing in systematic literature studies and a replication in software engineering,” in International Conference on Evaluation and Assessment in Software Engineering, 2014, pp. 1–10.

[99] J. Itkonen, M. V. Mäntylä, and C. Lassenius, “The role of the tester’s knowledge in exploratory software testing,” IEEE Trans. Softw. Eng., vol. 39, no. 5, pp. 707–724, 2013.

[100] S. Berner, R. Weber, and R. K. Keller, “Observations and lessons learned from automated testing,” in Proceedings of the 27th International Conference on Software Engineering (ICSE 2005), 2005, pp. 571–579.

[101] G. Fraser, M. Staats, P. McMinn, A. Arcuri, and F. Padberg, “Does automated white-box test generation really help software testers?,” in Proceedings of the 2013 International Symposium on Software Testing and Analysis, 2013, pp. 291–301.

[102] B. Marculescu, R. Feldt, and R. Torkar, “Objective Re-weighting to Guide an Interactive Search Based Software Testing System,” in 2013 12th International Conference on Machine Learning and Applications, 2013, pp. 102–107.

[103] E. Alégroth, J. Gustafsson, H. Ivarsson, and R. Feldt, “Replicating Rare Software Failures with Exploratory Visual GUI Testing,” IEEE Softw., vol. 34, no. 5, pp. 53–59, 2017.

[104] S. L. Lim and A. Finkelstein, “StakeRare: Using social networks and collaborative filtering for large-scale requirements elicitation,” IEEE Trans. Softw. Eng., vol. 38, no. 3, pp. 707–735, May 2012.

[105] E. C. Groen, J. Doerr, and S. Adam, “Towards Crowd-Based Requirements Engineering: A Research Preview,” in International Working Conference on Requirements Engineering: Foundation for Software Quality, S. A. Fricker and K. Schneider, Eds. Cham: Springer International Publishing, 2015, pp. 247–253.

[106] B. Regnell and S. Brinkkemper, “Market-driven requirements engineering for software products,” in Engineering and Managing Software Requirements. Berlin, Heidelberg: Springer, 2005, pp. 287–308.

[107] H. A. Simon, The New Science of Management Decision. Prentice-Hall, 1960.

[108] P. Laurent, J. Cleland-Huang, and C. Duan, “Towards Automated Requirements Triage,” in 15th IEEE International Requirements Engineering Conference (RE 2007), 2007, pp. 131–140.

[109] R. Gacitua, P. Sawyer, and V. Gervasi, “On the Effectiveness of Abstraction Identification in Requirements Engineering,” in 2010 18th IEEE International Requirements Engineering Conference, 2010, pp. 5–14.

[110] C. Potts, “Invented requirements and imagined customers: requirements engineering for off-the-shelf software,” in Proceedings of the Second IEEE International Symposium on Requirements Engineering, 1995, pp. 128–130.

[111] D. Pagano and B. Bruegge, “User involvement in software evolution practice: A case study,” in 35th International Conference on Software Engineering (ICSE), 2013, pp. 953–962.

[112] W. Maalej, M. Nayebi, T. Johann, and G. Ruhe, “Toward data-driven requirements engineering,” IEEE Softw., vol. 33, no. 1, pp. 48–54, Jan. 2016.

[113] T. Johann and W. Maalej, “Democratic mass participation of users in Requirements Engineering?,” in 2015 IEEE 23rd International Requirements Engineering Conference, RE 2015 – Proceedings, 2015, pp. 256–261.

[114] D. C. Brabham, “Crowdsourcing as a Model for Problem Solving: An Introduction and Cases,” Converg. Int. J. Res. into New Media Technol., vol. 14, no. 1, pp. 75–90, Feb. 2008.

[115] H. Meth, M. Brhel, and A. Maedche, “The state of the art in automated requirements elicitation,” Inf. Softw. Technol., vol. 55, no. 10, pp. 1695–1709, 2013.

[116] M. I. Jordan and T. M. Mitchell, “Machine learning: Trends, perspectives, and prospects,” Science, 2015.

[117] S. Amershi, M. Cakmak, W. B. Knox, and T. Kulesza, “Power to the People: The Role of Humans in Interactive Machine Learning,” AI Mag., 2014.

[118] T. Sedano, P. Ralph, and C. Peraire, “Software Development Waste,” in IEEE/ACM International Conference on Software Engineering, 2017, pp. 130–140.

[119] W. Cunningham, “The WyCash portfolio management system,” Proc. Object-Oriented Program. Syst. Lang. Appl. (OOPSLA ’92), vol. 4, no. 2, pp. 29–30, Apr. 1993.

[120] E. Alégroth and J. Gonzalez-Huerta, “Towards a Mapping of Software Technical Debt onto Testware,” in 43rd Euromicro Conference on Software Engineering and Advanced Applications, 2017, pp. 404–411.

[121] K. Petersen and C. Wohlin, “Measuring the Flow in Lean Software Development,” Softw. Pract. Exper., vol. 41, no. 9, pp. 975–996, Aug. 2011.

[122] M. Feyh and K. Petersen, “Lean Software Development Measures and Indicators – A Systematic Mapping Study,” in Lean Enterprise Software and Systems: 4th International Conference, LESS 2013, Galway, Ireland, December 1-4, 2013, Proceedings, B. Fitzgerald, K. Conboy, K. Power, R. Valerdi, L. Morgan, and K.-J. Stol, Eds. Berlin, Heidelberg: Springer Berlin Heidelberg, 2013, pp. 32–47.

[123] J. Münch, O. Armbrust, M. Kowalczyk, and M. Soto, Software Process Definition and Management. Springer, 2012.

[124] M. Martini, J. Pinggera, M. Neurauter, P. Sachse, M. R. Furtner, and B. Weber, “The impact of working memory and the process of process modelling on model quality: Investigating experienced versus inexperienced modellers,” Sci. Rep., vol. 6, 2016.

[125] S. Beecham, N. Baddoo, T. Hall, H. Robinson, and H. Sharp, “Motivation in Software Engineering: A systematic literature review,” Inf. Softw. Technol., vol. 50, no. 9–10, pp. 860–878, 2008.

[126] K. Dikert, M. Paasivaara, and C. Lassenius, “Challenges and success factors for large-scale agile transformations: A systematic literature review,” J. Syst. Softw., vol. 119, pp. 87–108, 2016.

[127] M. E. Conway, “How do committees invent?,” Datamation, vol. 14, no. 4, pp. 28–31, 1968.

[128] M. R. I. Nekvi and N. H. Madhavji, “Impediments to Regulatory Compliance of Requirements in Contractual Systems Engineering Projects,” ACM Trans. Manag. Inf. Syst., vol. 5, no. 3, pp. 1–35, Dec. 2014.

[129] M. Kassab, C. Neill, and P. Laplante, “State of practice in requirements engineering: contemporary data,” Innov. Syst. Softw. Eng., vol. 10, no. 4, pp. 235–241, Dec. 2014.

[130] P. Varga et al., “Making system of systems interoperable – The core components of the Arrowhead framework,” J. Netw. Comput. Appl., vol. 81, pp. 85–95, 2017.

[131] N. Zeni, N. Kiyavitskaya, L. Mich, J. R. Cordy, and J. Mylopoulos, “GaiusT: supporting the extraction of rights and obligations for regulatory compliance,” Requir. Eng., vol. 20, no. 1, pp. 1–22, Mar. 2015.

[132] R. Saavedra, L. Ballejos, and M. Ale, “Software Requirements Quality Evaluation: State of the art and research challenges,” in Argentine Symposium on Software Engineering, 2013.

[133] V. Pekar, M. Felderer, and R. Breu, “Improvement methods for software requirement specifications: A mapping study,” in 9th International Conference on the Quality of Information and Communications Technology, 2014, pp. 242–245.

[134] M. Borg and P. Runeson, “IR in Software Traceability: From a Bird’s Eye View,” in 2013 ACM / IEEE International Symposium on Empirical Software Engineering and Measurement, 2013, pp. 243–246.

[135] S. Lehnert, “A Review of Software Change Impact Analysis,” 2011.

[136] K. Alam, R. Ahmad, A. Akhunzada, M. Nasir, and S. Khan, “Impact analysis and change propagation in service-oriented enterprises: A systematic review,” Inf. Syst., vol. 54, pp. 43–73, Dec. 2015.

[137] R. K. Panesar-Walawege, M. Sabetzadeh, and L. Briand, “Supporting the verification of compliance to safety standards via model-driven engineering: Approach, tool-support and empirical validation,” Inf. Softw. Technol., vol. 55, no. 5, pp. 836–864, May 2013.

[138] T. Gorschek, C. Wohlin, P. Garre, and S. Larsson, “A Model for Technology Transfer in Practice,” IEEE Softw., vol. 23, no. 6, pp. 88–95, 2006.

[139] C. Wohlin et al., “The Success Factors Powering Industry-Academia Collaboration,” IEEE Softw., vol. 29, no. 2, pp. 67–73, Mar. 2012.